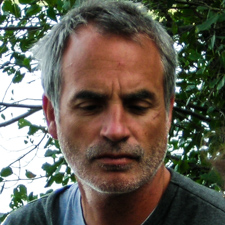

John Cayley makes digital language art, particularly in the domain of poetry and poetics. Recent and ongoing projects include How It Is in Common Tongues, a part of The Readers Project with Daniel C. Howe (thereadersproject.org), imposition with Giles Perring, riverIsland, and what we will. Cayley is Professor of Literary Arts at Brown University.


Articles on Amodern by John Cayley

THE TRANSLATION OF PROCESS

There are practices of literary art distinguished by the compositional use of software. As a constitutive aspect of their writing, computation may generate text from a store of linguistic elements or reconfigure previously composed texts. When asked to translate a work of this kind, we are confronted by actual ‘prior inscriptions,’ programs, that played some part in its writing. What critical or interpretative priority should we give to these inscriptions? Should we translate these programs? If so, how and when? "The translation of process," however, addresses liminal cases of works that are accepted as literary, possess existing translations, and which also instantiate program-like processes.

PENTAMETERS

Toward the Dissolution of Certain Vectoralist Relations

That this momentous shift in no less than the spacetime of linguistic culture should be radically skewed by terms of use should remind us that it is, fundamentally, motivated and driven by vectors of utility and greed. What appears to be a gateway to our language is, in truth, an enclosure, the outward sign of a non-reciprocal, hierarchical relation. The vectoralist providers of what we call services harvest freely from our searches but we users are explicitly denied any such reciprocal opportunity, even though our aim may be to imitate, assist or to prosthetically – aesthetically – enhance: to beautify the human user.

TERMS OF REFERENCE & VECTORALIST TRANSGRESSIONS

Situating Certain Literary Transactions over Networked Services

What if I, a good human, write a program that acts like a bad robot for good reasons, for aesthetic, culturally critical reasons, or to recapture some of that superb big data lying on the other side of the mouth-threshold where the powerful indexes dwell? Well, if I do that, it’s pretty bad, and it’s against most terms of use. Big software deploys whatever robots it wishes – indexing our web pages or policing our access to its services – and will deem these robots “good” without need of justification or regulation. But any robot that you or I build and that interacts with these services is “bad” by default.