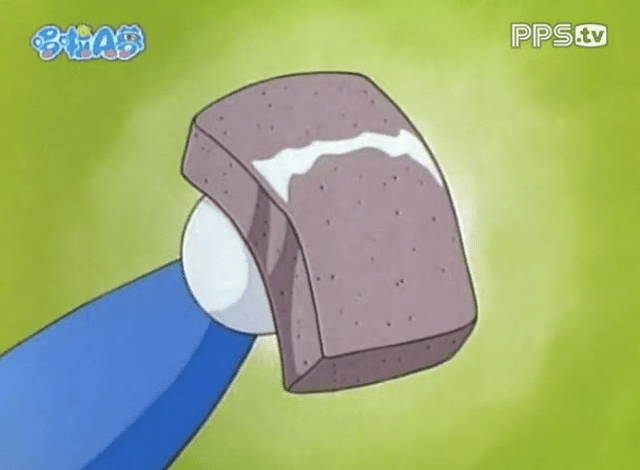

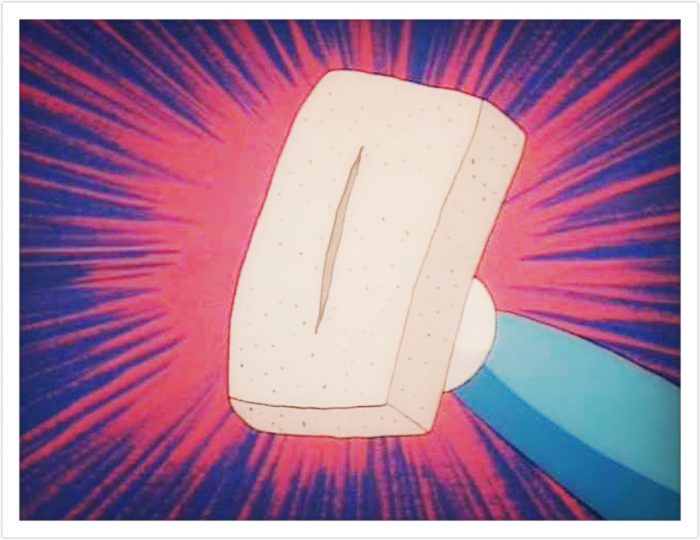

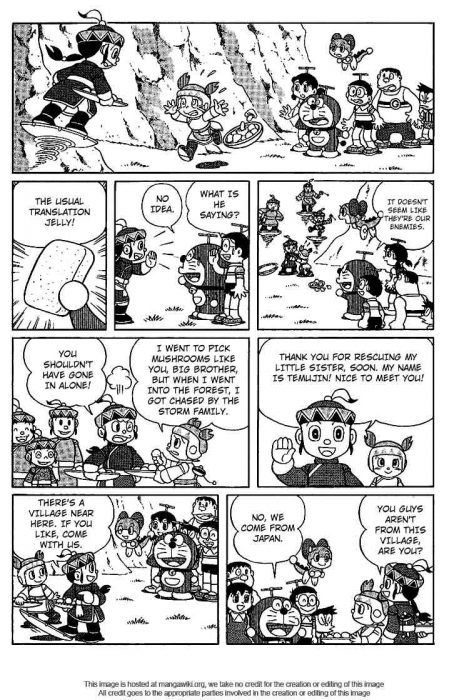

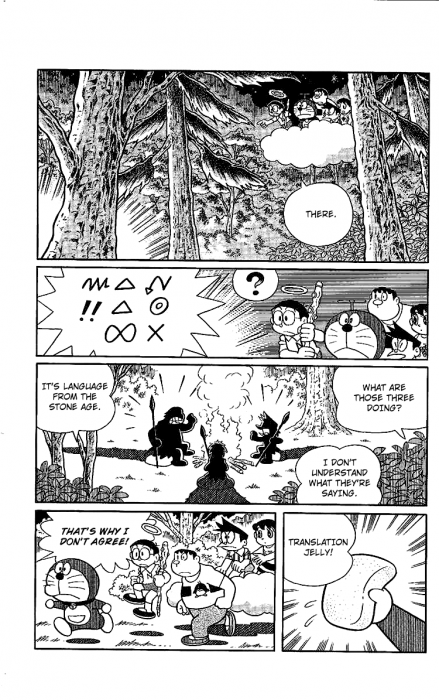

The Japanese manga and anime series Doraemon features a universal translation device in the form of a block of jelly. The block, about the size and heft of a deck of cards, is made from the corm of the konjac plant, or konnyaku, a common ingredient in Japanese cuisine, and ingesting it allows a person to understand and speak any earthly or alien language, albeit for a limited time. Though Doraemon and its fantastic technology have been in circulation since 1969, the notion of an edible translation jelly continues to resonate for the cultural imaginary of universal translation gadgets in the Anglophone West, where Douglas Adams’ Babel fish – a translating fish that dwells in one’s ear – has historically been evoked to account for the fictional side of translation’s invented machinery (as with AltaVista’s web-based translation application).1 Such whimsical translational entities may seem nostalgic at a time when the thought – and indeed the matter – of one-stop, just-in-time, in-and-out, back-and-forth translation is dominated by the machinic apparatus of Google Translate, with its complex algorithms, neural networks, and exabytes of language data.2

Even with machine translation tools ready-to-hand, and translation itself a common practice, a translator in the form of a digestible comestible remains marvelously evocative. Could there be anything better than a translation jelly to symbolize translation’s mediality? Like its sibling gels from the microbiology lab – the agars, broths and nutrient suspensions of the Petri dish – translation devices are also experimental media for the growth of culture.

This issue of Amodern is interested in translation and its machinations, less in the sense of schemes and intrigues than in the ways translation is variously machined – how it is structured, informed, imagined. Launching the exploration with a brief contemplation of the absurdity of a yam cake translator is in this respect highly suggestive. In contrast to Google, which publicly documents some of the development work on its machine translation algorithms, the Doraemon universe leaves all the operational details unspecified.3 Nevertheless, the questions we can ponder about a translator jelly are remarkably like those we might ask of translation software (both are weird or imaginary media, after all) and use as the basis for a sketch of the general landscape of translational activity.4

To that end: Are the jelly translator’s linguistic dispensations short-lived or do they have a lasting effect on the consumer, or the environment? In what sense is the translator “universal”? How far afield are the repercussions from its activity felt? Is it limited to that which immediately confronts ears and eyes, and the emissions of tongues and bodies, or can it guide fingers with writing implements, or even writing machines themselves, and on and on, in the same ways, and with equal force? Is its magic in the jelly mixing? In the ingesting? Or does it lurk externally, in the particular circumstances of the translation act? What can we learn from our expectations about functionality and the contexts for use? Would it operate the same way for me as it would for you? For newbies and for experts? Are there counter-indications, such as intolerance, allergies, spoilage? What is its shelf-life? Where can you get it, and at what cost? Since Doraemon provides few answers, we are left to consult external sources. Not surprisingly, cooking websites say nothing about the stuff’s professed translation utility, but they do provide some interesting characterizations. The jelly reportedly has “no distinctive taste of its own,” and only absorbs the taste of its surrounding ingredients.5 Lacking the usual nutritional building blocks, the gels are merely “like the intimation of food.”6 Someone consuming a significant amount would feel full from the mucilaginous bulk, but nonetheless remain hungry. The sensation experienced is described as one of “an empty, pure fullness that’s just enough to keep you going.”7

Oddly or serendipitously enough, the descriptions – which rely on assumptions about putatively normal food, satiety, and taste in order to make sense – could just as easily be statements about the linguistic yields and nourishments of computerized translation. Mechanized translation is perhaps best known for its intimation of substance, for the hollowness of its glut, for its absorption of external resources, for its temporary gratifications. Abbreviated, machine translation even sounds out this common characterization: literally “empty,” as “MT.” Such views often extend to machine translation’s supporting systems and software programs – speech recognition, text transcription, and speech synthesis – meanwhile drawing less criticism than machine translation’s cross-linguistic escapades, which are paradoxically difficult or even practically impossible for intended users to evaluate. Did Fujiko F. Fujio choose konnyaku as a translation medium because it possessed these not-quite real qualities? Whether food or software, such peripheral assessments are full of feeling. But they leave us in the dark about how translation happens; how translational processes and performances are confronted, managed, and assessed; and what translation has and might yet become.

The projects and reflections gathered here represent an attempt to “re-set” this jelly, to recognize and open up translation’s multiple black boxes. Our contributors achieve this by revisiting, uncovering, and extending machine translation’s historical record (Dupont, Slater, Mitchell, Littau); by close reading translations, not primarily or only as hermeneutic inquiries, but as analyses of process and procedure (Cayley, Birkin, Pilsch, Milutis); and, in a similar vein, by (re)activating machine translation development and operation through prototyping, re-creating, and experimenting with its devices and programs (Raley-Huopaniemi, Montfort, Chan-Mills-Sayers). Several of the studies aim to get “under the hood” of translation in the media archaeological mode; those that do not do so explicitly still aim to get under its skin.

*

Amodern 8 expands on a spring 2015 symposium held at New York University that explored the contexts and implications of translation as mechanism, media, technique, and transmission. Our tethering of translation to machination in the title of that event, and repeated again here, is key. It marks our intention to move beyond the habit of situating MT and computer-generated language in the familiar crisis poses of fakery, treason, and inauthenticity. Rather than regarding the machine as marking the limits of translation – an assumption that risks walling off translation practice from media and communication studies concerns, while still absorbing its products – our aim is to continue to investigate the possibilities and configurations of translation as machined, and translation as machining meaning, historically and in the contemporary moment. Our hyphen connects – Translation-Machination, Machination-Translation – and neither term comes first or stands alone.

“Machination” primarily refers to devices, strategies, and methods, and that is the translational territory our contributors occupy and explore. To observe translation at all, to recognize its normalizations and its power, and render its processes and labours visible, as Lawrence Venuti encouraged with respect to literary translation and translators, is to grapple with such machinations.8 This issue interlaces these concerns with the methodological approaches and research questions of media theory, media archaeology, and the histories of media and computing, extending this quest for visibility to translational encounters explicitly of the processual, technical, and computational kind. We are informed by Katherine Hayles’s analyses of technogenesis, and the co-evolution of human with technics, alongside translation studies such as Michael Cronin’s Translation in the Digital Age and Scott Kushner’s exploration of the algorithmic logics of freelance translation labour online, all of which demonstrate that inscriptive practices including translation have always been tool-oriented endeavors.9 We also recognize that the traditional divides in professional translation schools between literary and technical translation, and now also audiovisual translation, might be seen as circumventing issues of equipment, training, and techniques. Translation-Machination, as a fundamentally interdisciplinary endeavor, musses things up, by locating and interrogating connections between genres, formats, and contexts on the one hand, and human and programming languages on the other.

The approach has its obvious forerunners with Walter Benjamin, Marshall McLuhan, and Friedrich Kittler, for all of whom translation is a theoretical cornerstone.10 At the same time, because “translation” can so easily be used metaphorically as a way to theorize all manner of signal recordings and transmissions, the term forsakes some of its practical solidity as attention shifts away from translation-as-it-happens to other media, other technologies, and other concerns. As Emily Apter has pointed out, outlooks on translation and translatability tend toward two poles: either “nothing is translatable” or everything is.11 In media-theoretical spheres, it is not uncommon to encounter gestures toward the “everything” end of the spectrum with respect to symbolic content in the spirit of Kittler’s “postmodern Tower of Babel.”12 The metaphorical borrowings apply not only to translation, but to language as well. On one side, there is the history of language projects documented by Umberto Eco, while on the other, stories such as Nofre, Priestley, and Alberts’s – on how the search for the means to migrate computer code between a burgeoning variety of commercial machines became a search for a “universal language” – are only starting to be written.13 While it can certainly be generative to expand the translational scope to other media transformations – e.g. Zak Smith’s Pictures Showing What Happens on Each Page of Thomas Pynchon’s Novel Gravity’s Rainbow14 – for the purposes of this special issue, we have chosen to retain the more precise usage. In sum: we simply still care about language, about “verbal connective tissue,” as John Guillory so expertly phrased it, as a particular kind of medium – and in our contemporary computational environments, questions about what language is, how it moves, and how it is used, manipulated, maintained, transformed, and understood seem more pressing than ever before.15

A recent characterization of translation as “quaint, a boutique pursuit from a lost world” by theorist of uncreative language Kenneth Goldsmith helps illustrate the point.16 In today’s hyper-mediated and attention-limited environment, he argues, translation is out and “displacement” is it. Where translation once cared about what was written and how, displacement simply renders, unleashing text and cultural objects from stable contexts, shunting them around, overwriting them in the language of the moment, appropriating as necessary, stockpiling, or discarding.

While Goldsmith’s narrative of a supposedly new machine mode is on the surface compelling, and even seductive, it renders “translation” as too singular an event and practice, then and now. To be convincing, the claim needs to disregard rooted and ongoing entanglements between translation, information management, publishing, and text distribution, as well as the social and political conditions and effects of such changes.17 Important counterpoints for us in this regard include Rebecca L. Walkowitz’s study of the “born-translated” novel; Eva Hemmungs Wirtén’s Cosmopolitan Copyright, which analyzes the contentious and uneven place of translation in copyright history; and Michael Gordin’s Scientific Babel, which connects machine translation development history to the search for a universal language of and for science.18 And Gitelman’s treatise on the “always, already new” is itself always essential when considering claims of revolutionary newness for medial forms and practices.19

Contrast Goldsmith’s transmedial project with This be book bad translation, video games!, a book by game-localization insiders and fan-historians Clyde Mandelin and Tony Kuchar, which gathers and presents “bad” examples from four decades of Japanese-to-English video game translations, alongside an account of translation industry adaptations in game localization.20 While comprehension lost and reconstituted is a theme of both projects, Translation-Machination is drawn to the spirit of Mandelin and Kuchar’s effort, its earnest attention to the translations themselves, and the complex and shifting constellations of factors that produce and preserve them, including software, programming practices, game development, localization, fandom, and patterns of linguistic training and expertise. Academic case studies – for example, Lisa Gitelman’s expansive read of Emoji Dick as artifact and phenomenon and Hye-Kyung Lee’s analyses of manga scanlation and anime fansubbing practices – give substance and contour to such seemingly haphazard, replaceable, and throwaway text-image practices.21 Contemporary media permit the endless creation and stockpiling of layers of translations, as Mara Mills has observed with respect to audio descriptions of moving image files online.22 However, instead of seeing this abundance of translational media, means, and activity in the digital space (automated and not) as marking an end to translation, Mills gives us rhetoric, tools, and a model with which to conceptualize what she terms the “translation overlay,” which emerges as a new mode of media use.

Not long after Goldsmith’s intervention was published, a translation conspiracy fluttered through the mediasphere about Google Translate being used, or somehow gamed, to transmit secret messages. Strings of lorem ipsum “dummy” Latin returned unexpected results in English, e.g. “China,” “NATO,” and “Chinese internet.” The Atlantic asked, “Could it be a code hidden in plain sight?”23 In turn, Translation-Machination seeks an understanding of the territory and sensibility that vacillates from seeing Google Translate, today’s go-to translator, as brainless language portal (“it’s ugly, but it works”), to seeing programmed, strategic meaning in the operation of the mechanism itself. Whether or not translation is a thing of the past – reduced to art form or artisanal practice in conditions of global reach and instant connectivity – it nevertheless gains new visibility as systems, protocols, and platforms for automated and crowdsourced translation, programming, and information exchange proliferate. Our hunch is that this range of stances and reactions does not emerge only because “conventional translation” (as John Cayley usefully terms it in this issue) is being supplanted by other processes and automations, whether linguistic or non-linguistic. Rather, machine translation renders “translation’s machinations” newly visible, in exciting and disconcerting ways. What is revealed are our efforts to negotiate the apparatus, to distinguish “operation” from “error,” and to train ourselves and be trained in tandem with our training of systems.

As such, it is important to recognize that translation occupies a special place in the history of modern computing. Not only was it the first non-numerical application attempted with computers, but it was and still is an eminently graspable domain in which dreams and devices of artificial intelligence can be conjured, commended, and cajoled by a variety of publics. Looking forward, this issue makes translation a launch pad for addressing the sites and evolving understandings of linguistic and technical exchanges within the paradigm of “natural language processing” (NLP). This catch-all research and industry term refers to any process or application that mixes language and computation, as well as that branch of computer science and artificial intelligence that focuses on such interactions. Even better for our purposes, the term is a puzzle to parse in ways that amplify and account for precisely the human-machine entanglements that interest us: here [natural language] processing, there natural [language processing]. The term’s growing scope and sweep in computer science and engineering makes it an apt borrowing for text-oriented media and literary theorists, researchers, and practitioners who also seek to describe and chart their research terrain. In this sense, NLP articulates a material space that can bring poetics together with machine learning, literary history with data mining, literacy training with software engineering, and publishing with networking. That this appellation does not always care, or frankly understand, if its products are literary, is one of the key intrigues tackled in the contributions herein and, we hope, moving forward. Ultimately, this issue aims to bring media critical and historical reflection to bear on translation’s machinations by confronting not only what might be “natural” about natural language processing, but what is natural about natural language.

*

Our banner image for this issue, as well as those that head each contribution, are photographs taken at two separate theatre performances of love.abz by Otso Huopaniemi. Much like a text run through a machine translator or a message through the telephone game, the staging of this work is never exactly the same – this linguistic morphing is, as it were, part of its theatrical and technical programming. As Raley and Huopaniemi explain in their dialogue, love.abz was an evolving improvisational experiment with statistical machine translation at its centre. Voice-trained and scene-fed in situ by actor-improvisers as part of the show’s unfolding “Tech Plot,” the software programs (Google Translate and the speech recognition software Dragon Dictate) in many respects execute the performance itself, both imperceptibly and onscreen, translating – in fact, writing – in response to input from the actors as well as the archive. What could be perceived uncritically as a symphony of hilarious or frustrating machine blunders becomes, for audience and creators alike, a reflexive commentary on authorship, creativity, and human-technology interactions.

While “machination” may indeed evoke schemes and intrigues in and around translation, one of our motivating claims is that there is no essential division between human and machine performance that gives rise to them. As Raley points out, not only are machines our collaborators in managing our communication, but in so doing they instill “a recognition of the human in the loop” and thus illuminate our shared subject position. She takes the opportunity in that discussion to modify Walter Benjamin’s nearly century-old take on translation and ask, “What is the task of the machine translator?” The update pertains most obviously to computational devices created in the postwar period, starting from Warren Weaver’s famous “Translation” memorandum and culminating in present-day NLP and Google’s recent successes with neural networked MT. However, our expanded interest in translations’ methods and means lets us apply Raley’s question to human-machine pre-digital periods as well, and to imagine future “machine translators” whose playing field is scripted, but perhaps not recognizably linguistic.

Quinn Dupont, for instance, investigates machine translation in the reverse direction, unearthing deep-time precursors to Weaver’s cryptanalytical proposals for the computerized translation of languages. The task of the machine translator, in this case, is cryptanalysis, as discovered in the resonance that exists between methods and machineries devised to “crack” foreign languages and ciphertext alike. Andrew Pilsch, in contrast, looks toward the future in his investigation of transpilers, which are utilities that translate source code written in one programming language, or one version of a language, into equivalent source code in another. Regarding ES6 transpilers as machine translators of a unique but exemplary kind, Pilsch discovers an anticipatory mode in their functioning that resonates with other trends in digital writing. Created to account for the time lags that have plagued the release of programming language standards in recent years, transpilers perform translations of “future” scripts, versions of programming languages for which no standard yet exists. Transpilation, in Pilsch’s account, thus proposes and enacts a new model of translation, one that not only negotiates the conversion of human and machine codes, but also regulates our sociotechnical reality according to a new temporal rhythm.
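
For readers unfamiliar with transpilation, the following is a minimal sketch of the idea, not a description of any actual tool or of Pilsch’s own examples. It rewrites one narrow ES6 construct, the single-parameter arrow function with an expression body, into an older-style function expression. The names `ARROW` and `transpile_arrow` are hypothetical, and the pattern matching is a deliberate simplification: production transpilers such as Babel work on a parsed syntax tree and also handle cases ignored here, such as the different binding of `this`.

```python
import re

# Hypothetical pattern: a single-parameter arrow function with an
# expression body, e.g. "x => x * 2" or "(x) => x * 2".
# Block bodies, multiple parameters, and `this` handling are ignored.
ARROW = re.compile(r"\(?([A-Za-z_$][\w$]*)\)?\s*=>\s*([^;{]+)")


def transpile_arrow(source: str) -> str:
    """Rewrite simple ES6 arrow functions as ES5 function expressions."""

    def to_es5(match: re.Match) -> str:
        param = match.group(1)
        body = match.group(2).strip()
        # Doubled braces are literal braces inside an f-string.
        return f"function ({param}) {{ return {body}; }}"

    return ARROW.sub(to_es5, source)


if __name__ == "__main__":
    newer = "var double = x => x * 2;"
    print(transpile_arrow(newer))
```

Even in this toy form, the “translation” is a rule-governed rewrite between two written versions of the same language, which is what lets code in a not-yet-supported version run on systems that only understand the older one.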

Weaver and cryptography are showcased in Avery Slater’s analysis as well, though her concern is with postwar machine translation’s technocratic management of linguistic difference and literary responses thereto. While machine translation and computer-generated literature are cut from the same cloth, she suggests the former’s machinery inculcates a regime of “crypto-monolingualism” in the guise of “universal” linguistic plurality, while the latter, in re-programming computational routines as writerly interrogations, furnishes critiques of such fantasies of homogenization. Nick Montfort’s engagement with early machine translation research creates a productive frisson with Slater’s, as it draws tighter connections between literature and computer engineering. As part of his re-creation of a 1961 sentence-generation program by Victor H. Yngve, Montfort identifies a stream (or, indeed, a loop) of historical predecessors, both people and programs, as his creative and technical collaborators. Despite the non-literary aims of Yngve’s original program, Montfort claims the researcher “fired a poetic probe into language.”

For several contributors, translation provides an opportunity and incitement to do – to create, to copy, to version – in addition to reading and reflecting. For Chan, Mills, and Sayers, machine translatability is not only a cross-lingual matter, but pertains to readability across media and readerships. Alongside archival research, the group prototyped an optophone, a device that converts type into audible tones for use by blind readers, to better understand the reading experiences and design contributions of Mary Jameson, an early optophone reader. The study sheds an especially bright light on learning as a necessary and often laborious link in the chain of machine operations, as well as on the way user insights contribute to device design and technical and promotional innovations.

The relations among labour, innovation, and adoption also figure into Christine Mitchell’s look at the emergence of statistical machine translation around 1992, based on methods developed at IBM. While researchers both celebrated and fretted over the percentage error that necessarily accompanied the shift to probabilistic techniques, a third and parallel push envisioned niches for machine translation that delivered exactly what the machine produced, with the expectation that people would pick up the slack by learning languages themselves, or becoming translators, with the assistance of those same devices. Since we are witnessing yet another watershed moment as machine translation shifts from statistical systems to neural networks, it is worth considering whether similar expectations are in play regarding human-learners-in-the-loop.

IBM’s place in NLP history and lore cannot be denied, particularly when it comes to comparing the capabilities of humans and machines and valuing their epistemological contributions to language processing tasks. A remark attributed to IBM’s Fred Jelinek – “every time I fire a linguist my system improves” – has become the model phrase. Conceptually, NLP is the terrain on which the interminable debate between big data insights and human intuition plays out. Striving for a more nuanced and comprehensive viewpoint, Translation-Machination approaches such questions from protocological, procedural, and format theory points of view. Intuition and creativity are never banished from laboratories of either artistic or technological bent. The interest of several contributors in this regard, therefore, is to document these calibrations as decision processes that shape translations as material artifacts – and that are modulated or revealed by media formats, performance assessments and standards, administrative rules, and institutional policies.

Translators are readers first, and any translation – human or machine rendered – is thus not always or only a translation of or into a language, but is also a recovery of and negotiation with the technics of prior inscription. In Cayley’s words, it is a “translation of process.” Cayley centers his analysis on print-published literary works that exhibit some programmatic aspect (e.g. constraint, chance, software) in order to identify moments of dissonance and non-correspondence that result specifically from the recovery and activation, by the translator, of explicit process. More than providing a taxonomy of differences and decisions, or a template for further analysis – though these are furnished as well – Cayley’s close readings of the translation of process reassert the processual nature of translation and writing. Jane Birkin, working on the intermedial plane, mobilizes guidelines for interlingual translation to reflect on the mechanisms that regulate the translation of images to text descriptions, in the practice of archival cataloguing specifically. Birkin’s own description of a news photograph, as a case artifact, extends Cayley’s “translation of process” to the institutional realm, and serves as meta-commentary on information management and translation science claims over meaning, intuition, and creativity in the writing of descriptions, and their documentary status as derivatives.

Joe Milutis, too, reflects on translation as process in his analysis of W.G. Sebald’s Xerographic work, providing a corollary to Birkin’s piece in an engagement of text instantiated as an image of writing. Rather than arriving at or bouncing between translation’s extremities – high fidelity or meaningless noise – attending to translation’s granularity opens up onto both semiotic and material registers. As our focus turns to the machines that increasingly mediate our linguistic exchanges, an emphasis on process reminds us that conventional translation is still doing and that shifts in media and media use can register effects on the cognitive level as well. To that end, Karin Littau adds mediological contour to metaphors of translation by considering 19th-century optical media, specifically the magic lantern, in relation to translation practices. Through an analysis of Edward FitzGerald’s English translation of the Rubáiyát of Omar Khayyám, Littau considers the impact of scopic regimes on translators’ view of their cognitive work – conceived then and even now as a form of mediumistic, and hence also machinic, possession.

*

We set out speaking about konnyaku. The notion of translation jelly not only functioned as a kind of blank slate on which to project multiple versions of machined translation, but also set the stage for our acknowledgement of the limitations of the problematic that we have outlined with this collection of essays: although our concern is translation, the special issue is for the most part monolingual and monocultural. It perhaps goes without saying, but nonetheless needs to be said, that we regret not having had the occasion to engage more directly with questions of translingual cultural and gender politics, such as the protocols of translational practice that work to normalize and solidify regimes of universal and “hypothetical equivalence” between world languages and cultures, or the perpetuation of gender bias as engineered into machine translation systems.24 A non-trivial consequence of these omissions is that we risk inadvertently positioning Google as the primary referent for machine translation globally, whereas non-Western and non-Anglophone practices and histories of translation, text processing, and computing absolutely need to be brought into this discussion.25 Examples of projects and studies that reach beyond our present alphabetic and QWERTY-based biases – and will, we hope, be a fundamental part of future explorations of “translation-machination” – include Ramsey Nasser’s development of قلب, an Arabic programming language; Brian Hochman’s work on media-technological innovations and efforts to preserve and document indigenous languages; Ben Tran’s account of the adoption of a new Romanized alphabet and print media by Vietnamese intellectuals in the colonial era; and Thomas S. Mullaney’s work on text processing typing devices imagined and developed for the Chinese written language.26 Warren Weaver’s visionary speculations about the futures of computer translation cast a long shadow over the field even now. In his notable “Translation” memorandum of 1949, which incorporates aspects of a prior letter to Norbert Wiener, he reiterates: “it is very tempting to say that a book written in Chinese is simply a book written in English which was coded into the ‘Chinese code.’”27 Weaver was tempted by a particular cryptographic imaginary which, not unlike konnyaku, made claims to the universal that are importantly challenged by researchers studying translations across different writing systems.

To keep with Weaver’s language of temptation, our own is to regard this special issue as demonstrating the directions the study of translation and machination might go. At the same time, there is still, we think, a temptation in media studies circles to allow the widening interest in media archaeology and technical analysis – and the critical, creative tinkering and making that often accompany them – to supplant questions about the operations of language. It is our hope, then, that this focus on Translation-Machination, undertaken in the spirit of those same investigations, will re-center such questions into media study in reinvigorated form, and generate rich historicist and interpretative work that does not see media and language as opposing values, but digs even more deeply into their entanglements.

Babelfish, https://www.babelfish.com/. ↩

Note, however, that Google’s newly released Pixel Buds promise to actualize the Babel fish dream with a prosthetic supplement: earphones with the neural network functionality of Google Translate. ↩

“Machine Translation,” Research at Google, https://research.google.com/pubs/MachineTranslation.html. ↩

Following Jussi Parikka, “the question of imaginary media is: what can be imagined, and under what historical, social and political conditions? What are the conditions for the media imaginaries of the modern mind and contemporary culture, and, on the other hand, how do imaginaries condition the way we see actual technologies?” Jussi Parikka, What is Media Archaeology? (Minneapolis: University of Minnesota Press, 2012), 47. ↩

“Foodie Fridays – Classic Japanese Pork Stew,” December 6, 2013. http://www.dinomama.com/2013/12/foodie-fridays-classic-japanese-pork.html. ↩

Chichi Wang, “Seriously Asian: Konnyaku Recipe,” n.d. http://www.seriouseats.com/recipes/2010/04/seriously-asian-simmered-konnyaku-with-beef-recipe.html. ↩

Chichi Wang, “Seriously Asian: Konnyaku Recipe,” n.d. http://www.seriouseats.com/recipes/2010/04/seriously-asian-simmered-konnyaku-with-beef-recipe.html. ↩

Lawrence Venuti, The Translator’s Invisibility: A History of Translation (New York: Routledge, 1995). ↩

N. Katherine Hayles, How We Think: Digital Media and Contemporary Technogenesis (Chicago: University of Chicago Press, 2012); Michael Cronin, Translation in the Digital Age (New York: Routledge, 2012); Scott Kushner, “The freelance translation machine: Algorithmic culture and the invisible industry,” New Media & Society 15.8 (January 2013): 1241-1258. ↩

Walter Benjamin, “The Task of the Translator,” in Illuminations, trans. Harry Zohn; ed. & intro. Hannah Arendt (New York: Harcourt Brace Jovanovich, 1968), 69-82; Marshall McLuhan, Understanding Media: The Extensions of Man (New York: McGraw-Hill, 1964); Friedrich Kittler, Discourse Networks 1800/1900 (Stanford: Stanford University Press, 1990). ↩

Emily Apter, The Translation Zone: A New Comparative Literature (Princeton, NJ: Princeton University Press, 2005). ↩

Friedrich Kittler, “There is No Software,” in John Johnston (ed.) Literature, Media, Information Systems: Essays (Amsterdam: G+B Arts International, 1997), 147-155. ↩

Umberto Eco, The Search for the Perfect Language (Malden, MA: Blackwell, 1997); David Nofre, Mark Priestley, and Gerard Alberts, “When Technology Became Language: The Origins of the Linguistic Conception of Computer Programming, 1950–1960,” Technology and Culture 55.1 (January 2014): 40-75. ↩

See also: http://tinhouse.com/product/pictures-showing-what-happens-on-each-page-of-thomas-pynchon-s-novel-gravity-s-rainbow-4/. ↩

John Guillory, Cultural Capital: The Problem of Literary Canon Formation (Chicago: University of Chicago Press, 1993), 264. ↩

Kenneth Goldsmith, “Displacement is the New Translation,” Rhizome (June 9, 2014), http://rhizome.org/editorial/2014/jun/9/displacement-new-translation/. ↩

It is perhaps worth noting that the claim is also ironically undercut by the repackaging of the Rhizome.org polemic “against translation” as an 8-volume, 8-language, professionally translated box-set issued by the boutique publisher Jean Boîte. ↩

Eva Hemmungs Wirtén, Cosmopolitan Copyright: Law and Language in the Translation Zone (Uppsala: Meddelanden från Institutionen för ABM vid Uppsala universitet Nr 4, 2011). ↩

Lisa Gitelman, Always Already New: Media, History, and the Data of Culture (Cambridge: MIT Press, 2006). ↩

See also Mandelin’s Legends of Localization, https://legendsoflocalization.com/. ↩

Lisa Gitelman, “Emoji Dick and the Eponymous Whale,” IKKM Lectures (June 15, 2016), https://vimeo.com/171102394; Hye-Kyung Lee, “Participatory media fandom: A case study of anime fansubbing,” Media, Culture & Society 33.8 (2011): 1131-1147; Hye-Kyung Lee, “Between fan culture and copyright infringement: manga scanlation,” Media, Culture & Society 31.6 (2009): 1011-1022. ↩

“I want to propose that audio description is part of a growing category of media use, the ‘translation overlay,’ in which alternative content is added to source material without creating a new work.” Mara Mills, “Listening to images: audio description, the translation overlay, and image retrieval,” The Cine-Files 8 (Spring 2015). http://www.thecine-files.com/listening-to-images-audio-description-the-translation-overlay-and-image-retrieval/. ↩

Rose Eveleth, “A Secret Code in Google Translate?” The Atlantic, August 20, 2014. https://www.theatlantic.com/technology/archive/2014/08/a-secret-code-in-google-translate/378864/. ↩

Lydia H. Liu, Tokens of Exchange: The Problem of Translation in Global Circulation (Durham: Duke University Press, 2000). On the issue of gender bias in MT, see https://genderedinnovations.stanford.edu/case-studies/nlp.html#tabs-2. ↩

Work on postcolonial computing and postcolonial histories of computing suggests one route forward for the study of machine translation. See Lily Irani, Janet Vertesi, Paul Dourish, Kavita Philip, and Rebecca E. Grinter, “Postcolonial computing: a lens on design and development,” Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 1311-1320; Fabian Prieto-Ñañez and Bradley Fidler, “Postcolonial Histories of Computing,” IEEE Annals of the History of Computing 38.2 (April-June 2016): 2-4. ↩

Brian Hochman, Savage Preservation: The Ethnographic Origins of Modern Media Technology (Minneapolis: University of Minnesota Press, 2014); Ben Tran, Post-Mandarin: Masculinity and Aesthetic Modernity in Colonial Vietnam (New York: Fordham UP, 2017); Thomas S. Mullaney, The Chinese Typewriter: A History (Cambridge: MIT Press, 2017). ↩

Warren Weaver, “Translation” (1949), Machine Translation of Languages: Fourteen Essays, eds. W.N. Locke and A.D. Booth (Cambridge: MIT Press, 1955), 22. On Weaver’s pivotal memorandum see Rita Raley, “Machine Translation and Global English,” The Yale Journal of Criticism 16.2 (2003): 291-313; Christine Mitchell, “Unweaving Weaver from Contemporary Critiques of Machine Translation,” Canadian Association of Translation Studies Young Researchers Program, 2010. http://act-cats.ca/wp-content/uploads/2015/04/Mitchell_Unweaving-Weaver.pdf; N. Katherine Hayles, How We Think: Digital Media and Contemporary Technogenesis (Chicago: University of Chicago Press, 2012), 161-63; and Avery Slater in this issue. ↩

This special issue on Translation-Machination emerges from a symposium of the same name. The event on February 15, 2015, was organized by Christine Mitchell, with assistance from Xiaochang Li and Matthew Hockenberry, and hosted by the Department of Media, Culture and Communication at NYU as part of that department’s PROGRAM Series. The event featured presentations by Rita Raley, Xiaochang Li, Mara Mills, Dean Jansen, Christine Mitchell, Luke Stark, and Emily Apter. We would like to thank the participants and audience for their engaging contributions and reflections, as well as Lisa Gitelman, Dove Pedlosky, Carlisa Robinson, and Jamie Schuler, of MCC and the NYU English Department, for their support and organizational expertise in putting on the event. Last, we especially thank Darren Wershler and Scott Pound, Editors of Amodern, for the opportunity to showcase the symposium and share its thematic with readers; Michael Nardone, Amodern Managing Editor, for his editorial assistance and technical guidance; and the issue reviewers for their conscientious work and valuable insights.

Article: Creative Commons Attribution-NonCommercial 3.0 Unported License.